When A.I. took over the world in 2022, I kept noticing sparkles.

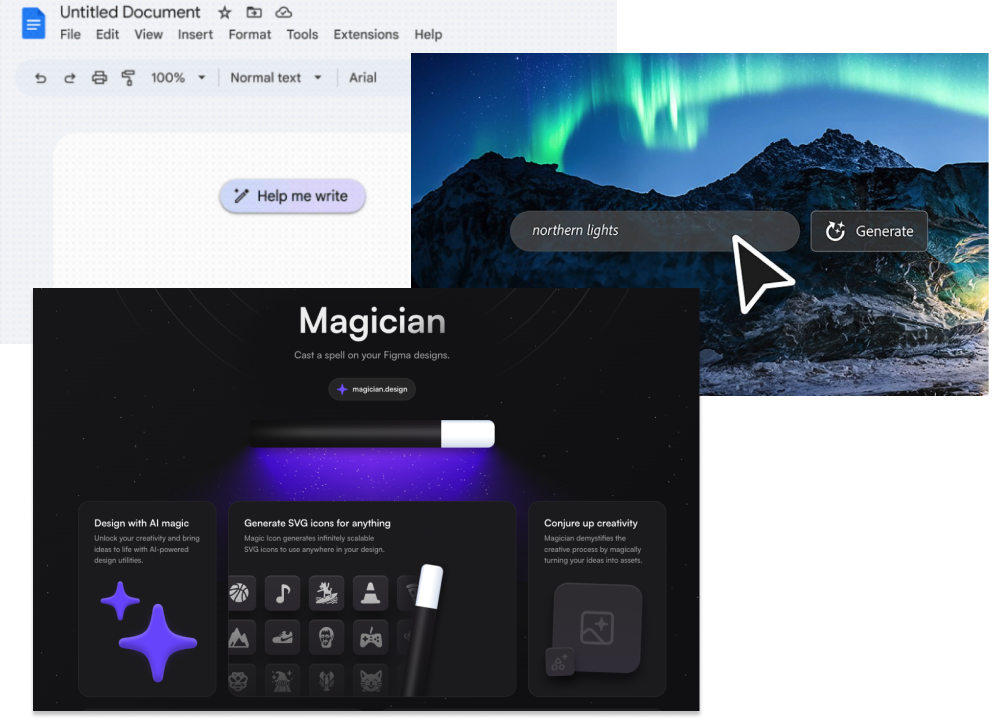

Tech companies like OpenAI were inventing the practical and legal mechanisms of artificial intelligence as they went along — “Oh, you don’t want us to scrape your data? Here’s some code you can add to your site (but we already scraped it)” — and designers were inventing new signifiers and conventions for the nascent technology. And one that everyone seemed to arrive at organically was the four-pointed sparkle icon ✨ to denote the presence of AI.

I hereby declare “✨” as the official symbol for AI pic.twitter.com/rpptCiQYZT

— Fons Mans (@FonsMans) January 26, 2023

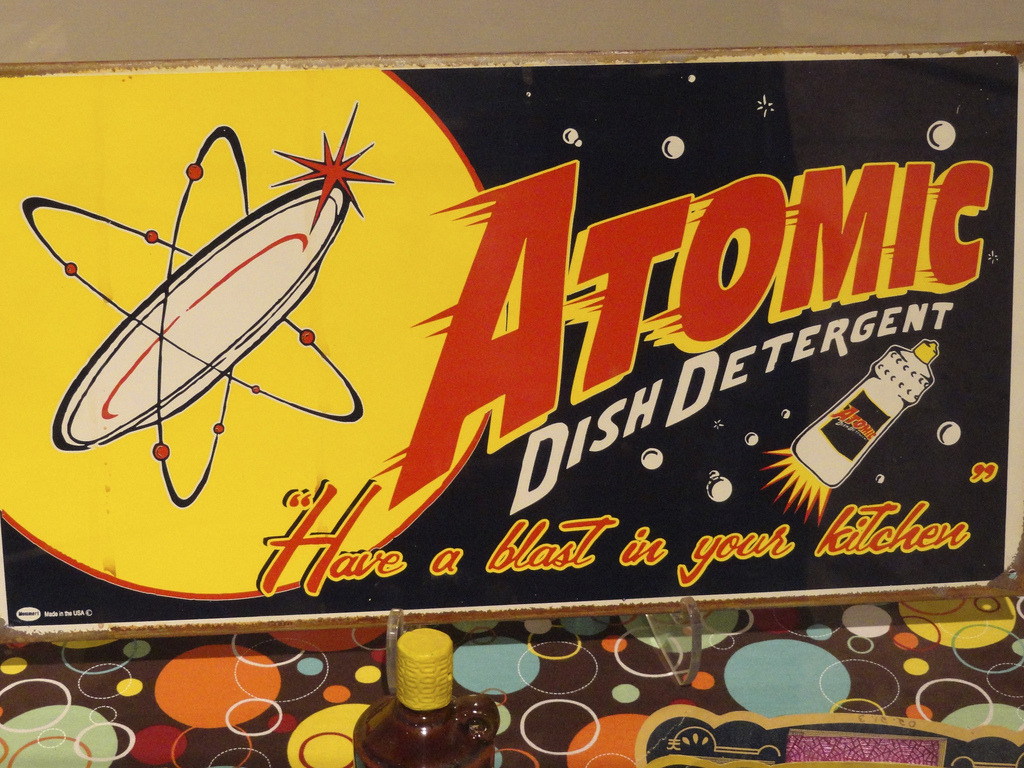

It’s a marketing move for sure, suggesting that the software can solve our problems and make sense of a chaotic world in a jiffy. It’s also a fitting symbol for a technology whose inner workings even A.I. researchers can’t explain with total certainty. Daley Wilhelm shared a theory last March that the sparkle could be a descendant of atomic age design: in design artifacts from the 1950s, we see sparkles on George Jetson’s flying car and in nuclear advertising. In those cases, though, I see the sparkle as a vibrating atom, a reflection off the surface of a glistening future that never came to pass. Today, sparkles mean magic.

And sometimes A.I. does feel like magic! When you’re writing and the words keep appearing after your fingers leave the keyboard, continuing your thoughts or optimizing your ideas into SEO copy. Or when you press enter in Midjourney and watch your images materialize out of the ether, from fuzzy shapes to photographic clarity. Those gee-whiz moments will become routine, but the rush of articles, interviews, legislation, and other attention to generative A.I. suggests that many people recognize the allure and danger of the unknown.

I present you a newly crafted magical word: ECHOTWIRL

Look at some examples on this thread, unleash your creativity and show me the results! pic.twitter.com/KQhN2NgxuA

— Ana Franco (@anafrancoAI) May 25, 2023

Should we, as thoughtful and boundless humans, feel offended by the technocratic appropriation of magic? After all, magic has been with us for a long time and has roots in some of the most humanistic of rituals and beliefs. When so-called A.I. artists use magical words like “echotwirl” to generate images, or A.I. chatbots mimic the personalities of friends or celebrities, I can safely say that, yes, that is stupid. But the hope for automating vast swathes of intellectual labor is that it will lead to a shorter work-week and a life rich with non-economic meaning. And there, A.I. might just offer some revelations of consciousness and knowledge, new art forms, or yet-to-be-imagined experiences. With people casting “spells” through prompts and “manifesting” the lives they want through viral posts on social media, I was curious how the history of magic could inform its present intersection with technology and the arts.

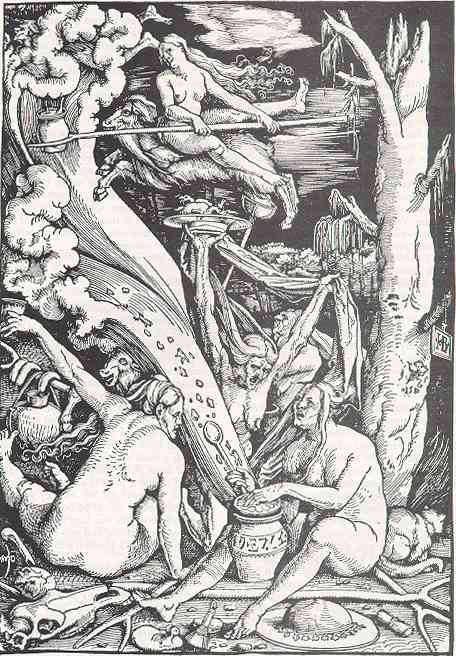

Victoria Nelson tells us that during the Enlightenment, “the magus — the creator of magic [...] — found his role as manipulator of the forces of nature usurped by the scientist.” Before that, in medieval Europe, magic had been a catchall for spiritual practices and folk knowledge that existed outside of Christianity. As science and philosophy grew as systems for understanding the world, magical practices were sorted into rational professions. “The good magus role,” Nelson writes, “was ultimately absorbed into the figures of the artist and writer; the bad magus role, with its supernatural powers linked to the... underworld, was absorbed into the figure of the scientist.”

Art plumbed the depths of the self through Modernism and beyond, and continues to offer a kind of spiritual meaning in today’s world of dwindling religion. Many artists would be happy to call their work magical! They might even claim mastery of infinite inner and outer space, and indeed, artist-scientists, hackers, and the avant-garde do sometimes discover new frontiers of physical invention.

But the external world is a lot easier to measure, parcel, and engineer, and so it has been colonized by scientists over the past few centuries. Thinking about artists and teachers working with technology (👋 hello!), I was particularly struck by Nelson’s characterization of the mad scientist, who “represents a disguised fear of sorcery.”

Occult historian James Webb (no relation to the telescope) described magic as “rejected knowledge,” in opposition to the measurable, scientific domain. Magic contained things that are subjective, interior, and often non-Western, he argued, a view shared by other historians of magic like Gary Lachman.

Much of this writing comes from the excellent anthology Magic (part of the Documents of Contemporary Art series), and I’ll give you one more passage to help frame the present moment of AI.

Erik Davis compared magic not to the physical sciences of the Enlightenment but to cyberspace. In 1994, he wrote that “complexity space is complexity space — any information system, when dense and rigorous enough, takes on a kind of self-organizational coherence which resonates with other systems of complexity.”

People always grasp for metaphors when trying to understand new technology (e.g. files and folders), but Davis saw more specific parallels between computers and magic:

Magical texts consist of endless lists of [demons], their appearances, numbers, and powers... These agents mediate the complexity of supercelestial information. They are the original image of artificial intelligence… the original text-based expert systems, independent software objects… passionless entities made of intelligent light.

The use of the word “agent” should stick out to anyone paying attention to current A.I. discourse: systems like ChatGPT are now capable of completing complex tasks under specific guidelines, and once again we reach for the analogy of a personal assistant or intelligent agent. The idea of an AI agent gained currency in the mid-90s too, as computer scientists anticipated our current technologies. But it was Davis who saw such programs as descendants of magical “demons,” conjured through text-based spells.

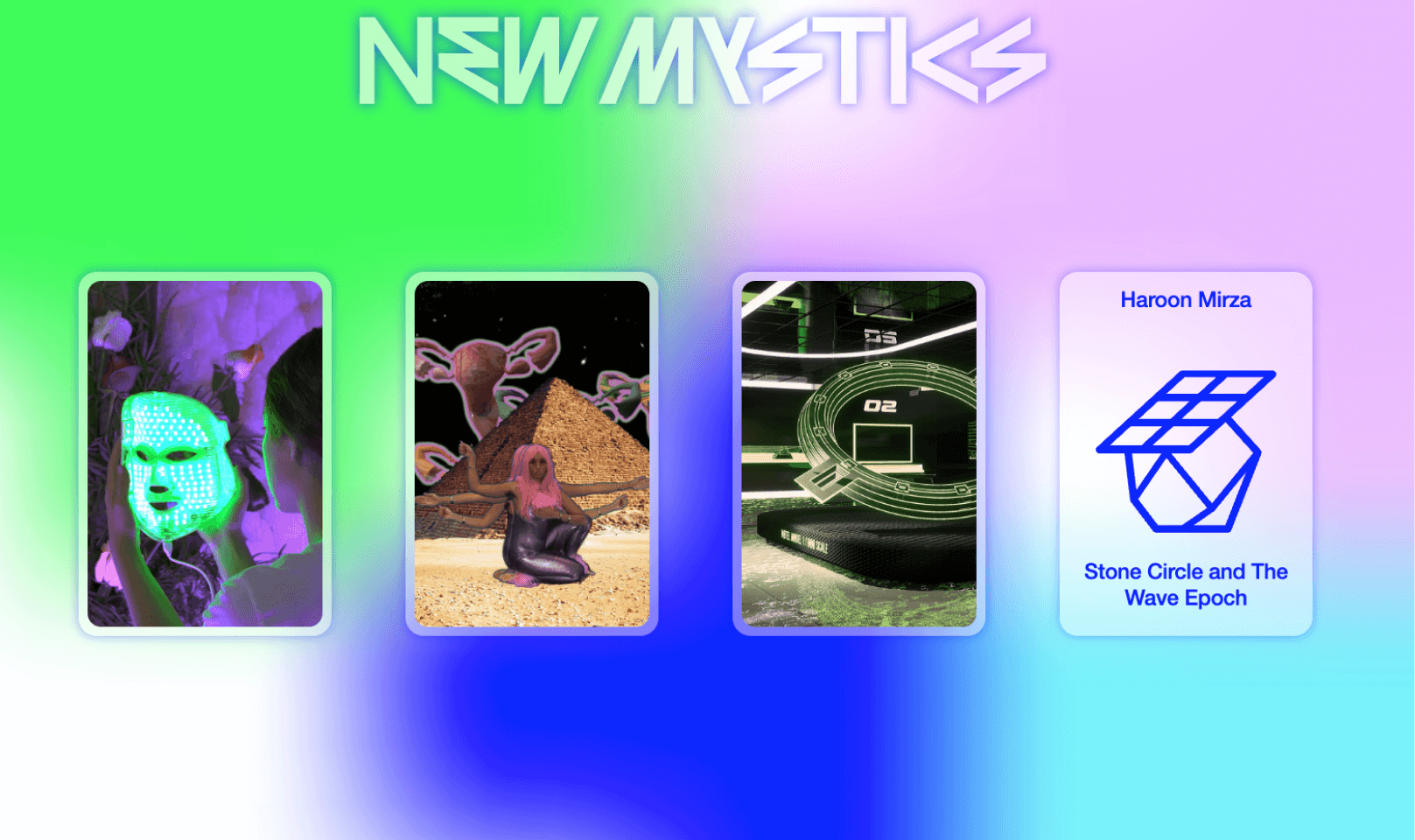

Since the dawn of the web, new media artists have relished the mystique of digital technology and global networks. The New Mystics, a contemporary artist collective led by Alice Bucknell, brings together such artists, who play with the imagery and culture of “rejected knowledge” and the occult: bones tan jones’s painted artifacts and flute performances, Sadia Pineda Hameed and Beau W Beakhouse’s “technical talisman” board, and Ian Cheng’s primeval landscapes populated by algorithmic creatures.

Bucknell’s manifesto for the group explains:

“The New Mystics [est. 2021] share a psychedelic aesthetic characterized by dimly lit digital landscapes, ambient soundtracks, technicolor palettes, and cosmic symbolism. [...] These artists are using the atmospheric potential of new technology to resurrect ancient belief systems bleached out of history”

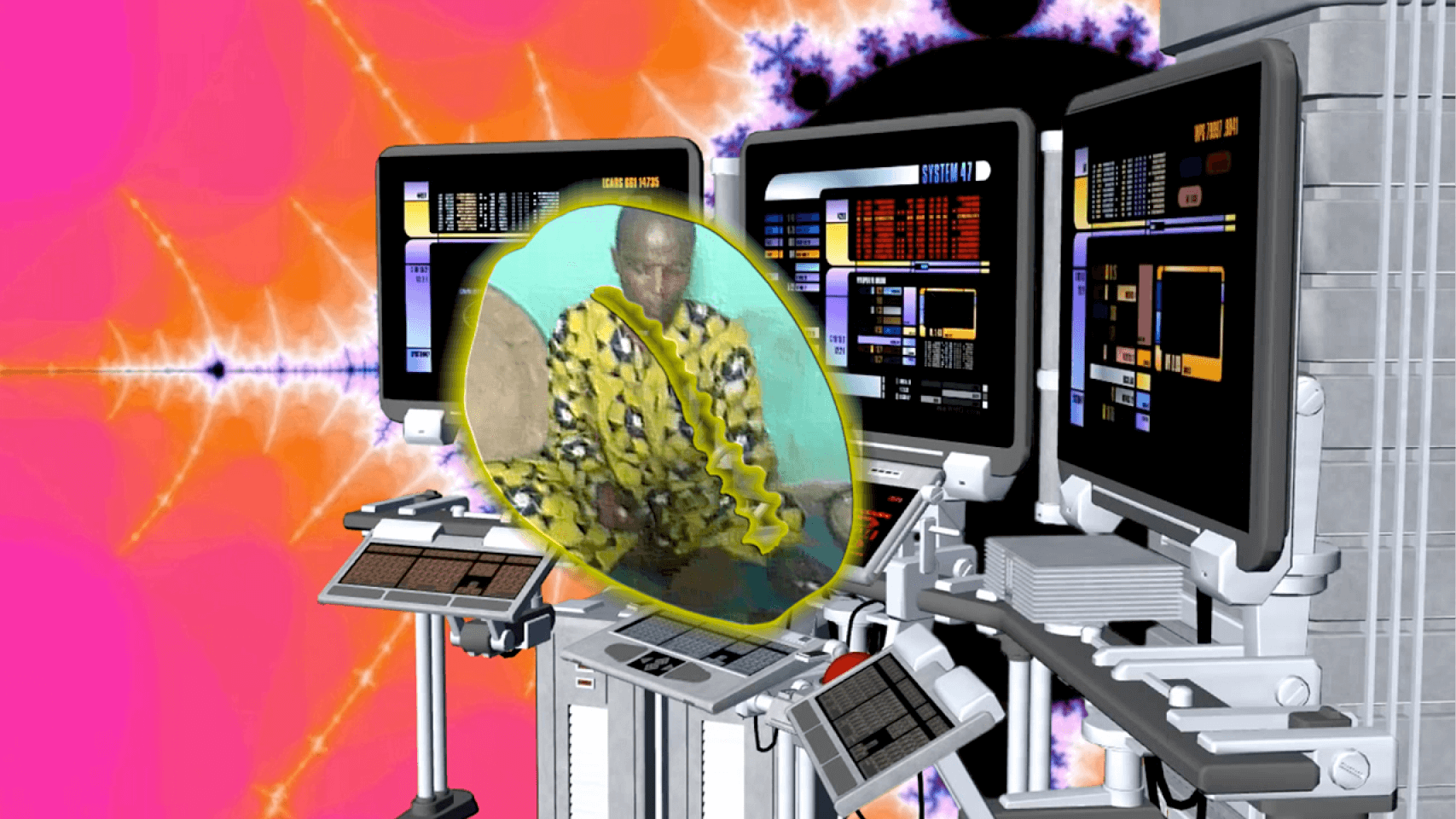

With such an eclectic mix of artwork, it’s hard to overlook the New Age kitsch, but much of the work does sincerely grapple with non-Western traditions and research, like Tabita Rezaire’s 2017 video Premium Connect (pictured at the top of this post), which explores “mystico-techno-consciousness” inspired by conversations with Nigerian philosopher Sophie Oluwole.

The conjunction of tech and psychedelic language calls to mind the trend of microdosing LSD in Silicon Valley, which is perhaps another echo of the semi-spiritual figure of the magus, and its shift into scientific invention. This brings us back to the question of whether AI actually taps into hidden mysteries of the universe — does it deserve the sparkle? ✨

Famed occultist Aleister Crowley wrote, surprisingly, that “it is immaterial whether [magical entities] exist or not. By doing certain things, certain results follow.” His advice from 1929 certainly resonates with OpenAI’s apparent preference for results over understanding. It also reminds me of another recurring subject in today’s AI arguments, one that I’m inclined to think about because of my own job: education.

To some extent, teachers follow Crowley’s mantra: “By doing certain things, certain results follow.” Lesson plans, pedagogical approaches, and the simple homework assignment are all concerned with the students’ future. Teachers cast spells just as much as programmers do, and whether through neuroscience or magic, we hope to know what results will follow certain actions.

The best moments in a classroom feel “like magic,” which is a similar usage of the word to the sparkle icon (“it just works”) but one that emphasizes affect over results. Perhaps that’s what the designers of today’s AI platforms are aiming for — the feeling of magic — but so much attention is paid to the measurable impacts and results instead. Will AI destroy this industry or that one? Will it ruin English class forever? Those are important questions, but especially in the context of the arts, I think teachers should look for opportunities to make AI-in-the-classroom feel magical.

To that end, here are some ideas for teaching strategies inspired by this dive into magic and technology:

- Bring “rejected traditions” into the classroom.

- Muddle systems together or try unusual analogies.

- Be a magician: conceal information or structure lessons around big reveals.

- Acknowledge moments that feel “like magic” and recreate them.

- Use technology as an “agent” to improve efficiency or increase powers.

- Foster liminal spaces outside the constraints of everyday life.

- Reveal the inner workings of technology, to “demonstrate how the machine works so that one is not duped by its workings, but revels in the innovation of their own fantastic complex.” (Aaron Gach, Center for Tactical Magic)